This means that GPT-3 is over 100 times larger than GPT-2 in terms of the number of parameters. GPT-3 is a much larger model than GPT-2, with 175 billion parameters compared to GPT-2's 1.5 billion parameters. Let's start by estimating the FLOPS and training data required for GPT-3: The computational capabilities of supercomputers available in different years.The amount of training data required to train GPT-3.The number of floating-point operations per second (FLOPS) required to train GPT-3.To estimate the year in which GPT-3 could have been trained, we need to consider several factors: Estimate how many flops and training data gpt-3 needed to train, and then determine when there was a supercomputer large enough to do it within a few months. It might have been possible to collect that much data from the web at that time.īased on this article's logic, I want you to estimate what year GPT-3 would have been possible. He estimates that gpt-2 used 10^21 flops and about 40GB of training data. Hopefully an export feature will get added.Ī recent article argues that it would have been possible to train GPT-2 in 2005, using the bluegene/l supercomputer. Note that it took me more time to format this conversation for the post than the time it took to have the conversation itself. Also, I will not attempt to fix math mistakes or comment on them, but feel free to do the math "manually" and let me know if ChatGPT got it right. I did not fix my own spelling mistakes and grammatical errors, so you can see the real prompts that ChatGPT is replying too.

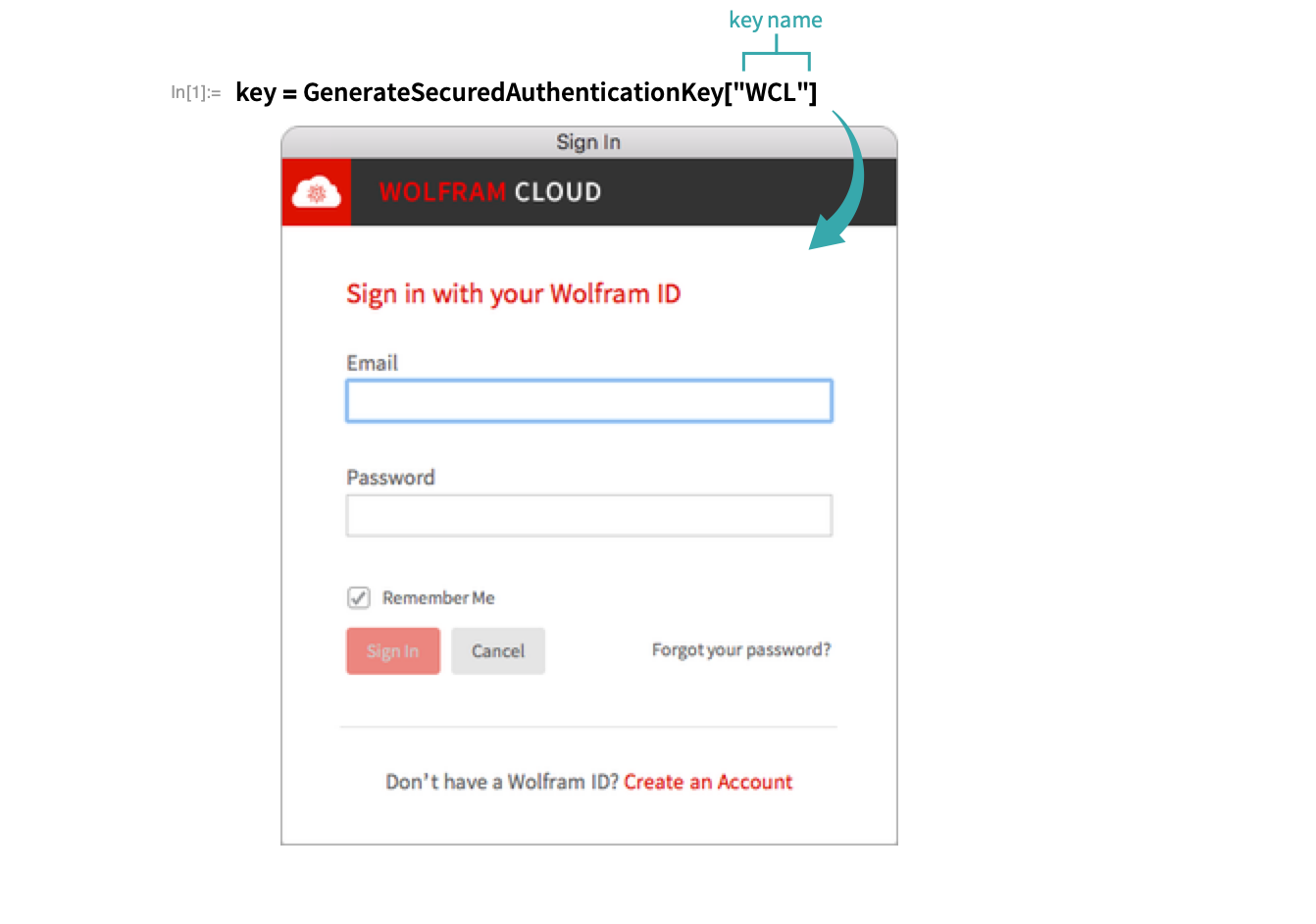

At one point it even gave up and did the math "manually" (meaning by itself.) It's possible that it learned how to do this from OpenAI engineers with clever prompting and training data, but it still shows some agency and fluid intelligence.Įverything said by ChatGPT is quoted as follows:Įverything unquoted is me. The most startling thing I saw in this conversation was that ChatGPT actually tried to recover from its own errors. It got an error message almost every time it tried to use the plugin. However, don't get too excited, as ChatGPT doesn't seem to know how to use its new toy. This new plugin can (attempt to) do math and even (attempt to) look up statistics, such as the FLOP/s of the most powerful supercomputers from the TOP500 list. Today I had the following conversation, on the same topic, with ChatGPT (GPT-4) using the new Wolfram Alpha plugin. Yesterday I read this blog post which speculated on the possibility of training GPT-2 in the year 2005, using a supercomputer.
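For readers who want to do this first step of the math "manually", here is a minimal Python sketch (not part of the conversation). The parameter counts and the 10^21 FLOP figure for GPT-2 come from the exchange itself. The rest are assumptions added for illustration: the common rule of thumb of roughly 6 FLOPs per parameter per training token, and about 300 billion training tokens, the figure reported for GPT-3.

```python
# Parameter counts quoted in the conversation.
GPT2_PARAMS = 1.5e9
GPT3_PARAMS = 175e9

# The article's estimate for GPT-2 training compute, as quoted in the prompt.
GPT2_FLOPS_ARTICLE = 1e21

# Assumption (not from the conversation): GPT-3 saw roughly 300 billion tokens.
GPT3_TOKENS = 300e9


def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token.

    This is an approximation that ignores attention overhead and other details.
    """
    return 6 * params * tokens


ratio = GPT3_PARAMS / GPT2_PARAMS
gpt3_flops = training_flops(GPT3_PARAMS, GPT3_TOKENS)

print(f"GPT-3 / GPT-2 parameter ratio: {ratio:.1f}")  # 116.7
print(f"Approximate GPT-3 training compute: {gpt3_flops:.2e} FLOPs")
print(f"Multiple of the article's GPT-2 estimate: {gpt3_flops / GPT2_FLOPS_ARTICLE:.0f}x")
```

The ratio confirms the "over 100 times" claim. Note that the total compute grows faster than the parameter count alone, because GPT-3 was also trained on more tokens.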
